Chihye Han (Kelsey)

Department of Cognitive Science, Johns Hopkins University

chan21@jhu.edu

I am a Ph.D. candidate in Cognitive Science at Johns Hopkins University, advised by Prof. Michael F. Bonner. I study the neural basis of visual experience using computational modeling, neuroimaging, and deep learning approaches. My research focuses on understanding how high-dimensional neural geometries give rise to complex, rich visual perception.

Previously, I received my M.S. from KAIST and my B.A. from Carleton College. I have also worked at LG AI Research and Samsung Electronics.

Updates

- Jan 2026: Our paper on individual differences in visual experience was published in Current Biology!

- Aug 2025: Presented at CCN 2025 in Amsterdam, Netherlands.

- Mar 2025: New preprint on high-dimensional structure underlying individual differences in naturalistic visual experience.

- Aug 2024: Presented at CCN 2024 in Boston, MA.

- May 2024: Presented at VSS 2024 in St. Petersburg, FL.

Research


High-dimensional structure underlying individual differences in naturalistic visual experience

Current Biology

How do different brains create unique visual experiences from identical sensory input? We reveal that individual visual experience emerges from a high-dimensional neural geometry across the visual cortical hierarchy.

Han, C. & Bonner, M.F.


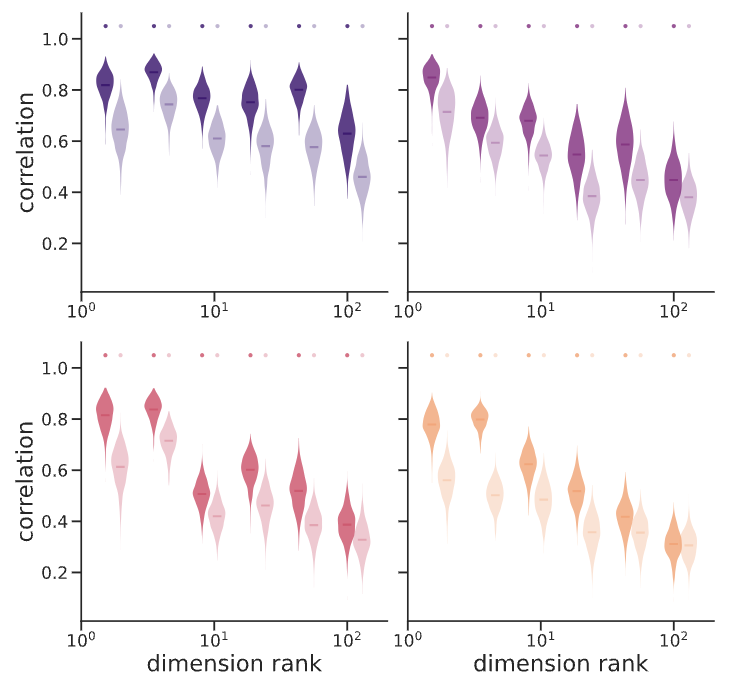

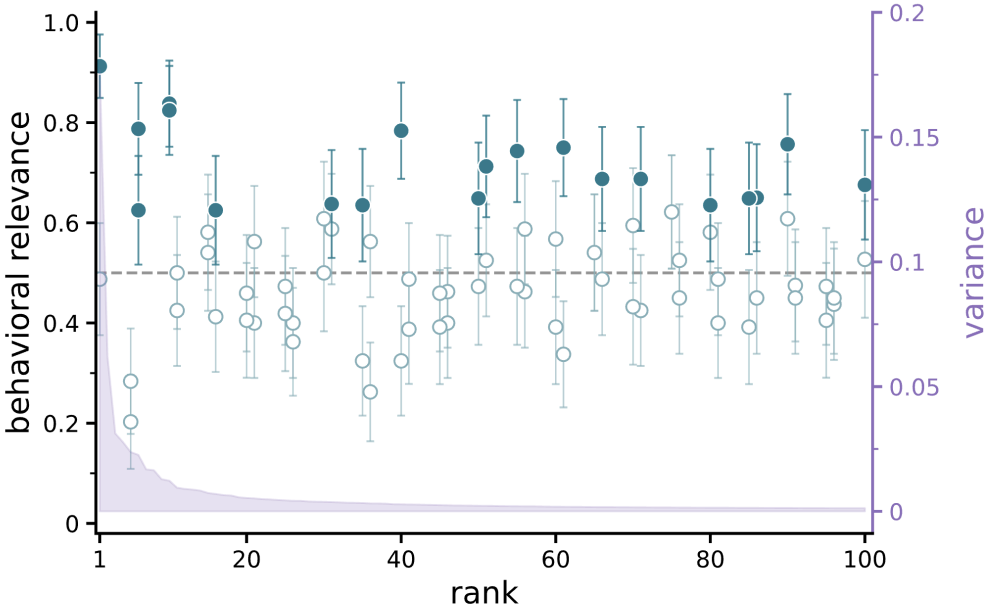

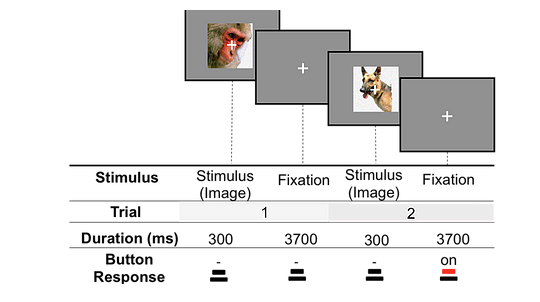

Behavioral relevance of high-dimensional neural representations

Cognitive Computational Neuroscience

Does behavioral relevance extend throughout high-dimensional neural representations, or is it restricted to interpretable, high-variance dimensions? We show that humans can perceive coherent structure in image clusters formed along principal components spanning the entire spectrum of ventral visual stream responses, suggesting that behaviorally relevant information is distributed across the full range of neural dimensions.

Han, C., Gauthaman, R.M., & Bonner, M.F.


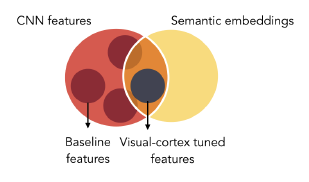

Quantifying the latent semantic content of visual representations

Vision Sciences Society

How does visual cortex extract semantic meaning from images? We developed a statistical measure called semantic dimensionality that quantifies the number of language-derived semantic properties that can be decoded from image-computable perceptual features.

Han, C., Magri, C., & Bonner, M.F.


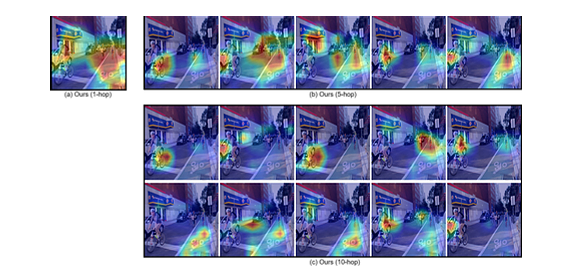

MHSAN: Multi-head self-attention network for visual semantic embedding

WACV

We propose a multi-head self-attention network to attend to various components of visual and textual data, achieving state-of-the-art results in image-text retrieval tasks.

Park, G., Han, C., Yoon, W., & Kim, D.


Representation of adversarial examples in humans and deep neural networks: an fMRI study

IJCNN

We compare the visual representations of adversarial examples in deep neural networks and humans using fMRI and representational similarity analysis.

Han, C., Yoon, W., Kwon, G., Nam, S., & Kim, D.


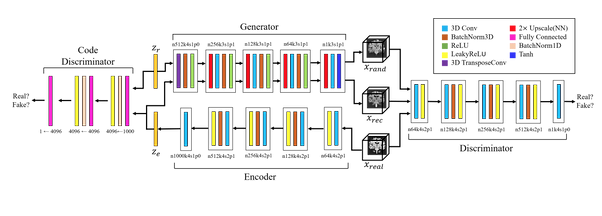

Generation of 3D brain MRI using auto-encoding generative adversarial networks

MICCAI

We generate realistic brain MR images of multiple types and modalities from scratch using alpha-GAN with WGAN-GP.

Kwon, G., Han, C., & Kim, D.